Human-Machine Teamwork

IHMC researchers are advancing the autonomous capabilities of robots, software agents, and artificial intelligence with the aim of creating more effective machine teammates. The view of robots as teammates has grown as the field of robotics has matured. The future will belong to collaborative or cooperative systems that do not merely do things for people, “autonomously,” but that can also work together with people, enhancing human experience and productivity in everyday life.

IHMC’s research on Human-Machine Teamwork involves:

- Understanding Teamwork

- Analyzing Teamwork

- Building Systems that Team Effectively

- Measuring Team Performance

- Learning How to Team

Understanding Teamwork

The essential starting point for human-machine teamwork is understanding teamwork. While most people have an intuitive sense of what teamwork is, it is surprisingly difficult to define concisely. Matthew Johnson’s thesis work proposed that teamwork is about managing interdependence between team members. His approach, called Coactive Design [1], was developed to address the increasingly sophisticated roles that people and robots play as the use of robots expands into new, complex domains. The term “coactive” was coined to characterize this kind of joint activity. Consider the example of playing the same sheet of music as a solo versus a duet. Although the music is the same, the processes involved are very different. The duet requires ways to support the interdependence among the players. This is a drastic shift for many autonomous robots, most of which were designed to do things as independently as possible. The fundamental principle of Coactive Design is that interdependence must shape autonomy [2]: independent operation via autonomy is a stepping stone to the larger goal of interdependent collaboration within teams of people and machines. Coactive Design’s goal is to help designers identify the interdependence relationships in a joint activity so they can design systems that support these relationships, and thereby achieve the objectives of coordination, collaboration, and teamwork.

Coactive Design provides a framework for a more principled exploration of the human-machine design space. It guides the design of both the autonomy and the interface, and provides insight into the impact a given change in autonomy may have on overall system performance. Coactive Design identifies three key interdependence relationships: observability, predictability, and directability. These three relationships are foundational interdependencies that are essential in the design of most systems and are the basis for more complex concepts such as explainability and trust. To help readers understand this perspective, we have developed a Coactive Design introductory tutorial on the theory and fundamental principles.

The Coactive Design method has proved useful in domains such as unmanned aerial vehicles (UAVs), unmanned ground vehicles (UGVs), and software agents for cybersecurity. Our biggest success story has been IHMC’s participation in the DARPA Robotics Challenge (DRC). The DRC was an international robotics competition aimed at developing robots capable of assisting humans in responding to natural and man-made disasters. It included more than 50 teams competing in three phases: the Virtual Robotics Challenge (VRC), the Trials, and the Finals. The tasks were selected by DARPA for their relevance to disaster response. IHMC placed 1st in the VRC, 2nd in the Trials, and 2nd in the DRC Finals. Our success was not based on flawless performance, but on resilience in the face of uncertainty, misfortune, and surprise. This was achieved through robust autonomous capabilities that were designed to work effectively with people using Coactive Design.

Analyzing Teamwork

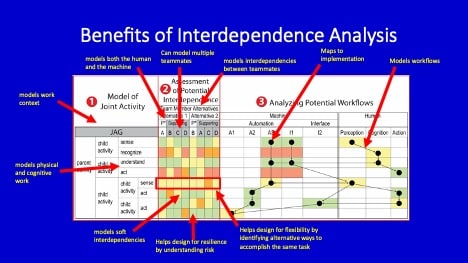

As any craftsman knows, having the right tool for the job makes all the difference. Sadly, adequate tools are lacking for the design of sociotechnical systems. The most widely known approach, which underpins many current efforts to build intelligent machines, is known as “levels of automation” (LOA). As the design of increasingly intelligent machines has brought new issues and options in human–machine interaction and teamwork to the fore, we, along with other researchers, have increasingly expressed serious misgivings about the LOA-based approach [2] [3]. We have developed an alternative analysis approach and an associated tool, called the Interdependence Analysis Table [1][4][5], to identify and understand the interdependence within an activity for a given team. This tool supports the systematic Coactive Design method. We have developed an Interdependence Analysis introductory tutorial on the tool and its application.

Building Systems that Team Effectively

With a proper understanding of teamwork, the next challenge is to build effective human-machine systems. For decades, engineers have used the same methods (e.g., behaviors and state machines) to build systems that team. However, all of these were developed to produce single-agent behavior. We propose that a better approach is to design all work as joint work from the start. Matthew Johnson and his colleague Micael Vignati have developed Joint Activity Graphs (JAGs) [6][7] as a tool to help understand, model, and implement effective team behavior. We have developed a Joint Activity Graph introductory tutorial.

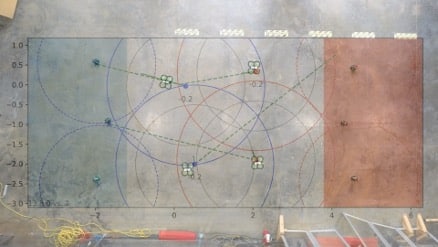

JAGs have been used to drive automated teammates’ behavior to produce effective machine-machine team behavior. This was demonstrated on various projects, including DARPA Context Reasoning for Autonomous Teaming (CREATE). The goal of CREATE was to develop distributed machine teamwork that was as effective as centralized control. We demonstrated complex dynamic team behavior in a Capture-the-Flag style activity. An advantage of our approach is that it is inherently scalable, because it models joint activity without assumptions about the team engaging in the activity. We have demonstrated scaling teamwork in the same domain without needing to change any code. We have also demonstrated transitioning our simulated results onto physical hardware.

When working with people, you cannot drive their behavior through automated algorithms. However, to be a good teammate, a machine needs to understand those it is working with. In this case, instead of using JAGs to drive agent behavior, we invert them and use them to model the people on the team. JAGs can be used to track and predict human teammates’ behavior so that the agent can make more informed decisions with respect to team performance. We demonstrated this as part of DARPA Artificial Social Intelligence for Successful Teams (ASIST). The goal of ASIST was to develop AI agents capable of monitoring a team of humans and providing advice to improve team performance. We developed JAG-based analytic tools to track and monitor the human team members’ activity. These tools provided the awareness of the teaming context needed for an AI agent to provide support.

Measuring Team Performance

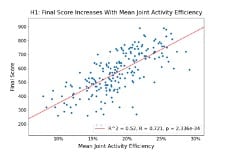

Though significant research effort has been focused on measuring team performance, it remains an open challenge that is critical to interpreting the effectiveness of any human-machine team. Johnson and Vignati have instrumented JAGs not only to track team behavior, but also to measure team performance through a novel measure called Joint Activity Efficiency (JAE). The basic idea is to assess how efficiently the team, not just individual members, is performing the joint work. To measure JAE we must first define a few constructs.

Activity duration is the time from when any team member first becomes aware of an activity until the activity is completed. It can be thought of as the range of an activity. Since team members can start tasks and then break off to do other things before returning to complete a task, activity duration includes periods when a team member is not actively working on the activity because they are engaged in another one. Activity duration therefore cannot distinguish inefficiency (slower work on the same task) from delayed work (working on a different task). Thus, we do not use activity duration, but define it here to distinguish it from the measure we do use.

By contrast, active duration is the time within the activity duration when team members are actively working on the activity. This excludes time when team members break off to do other things outside the scope (subtree of the joint activity graph) of the designated activity. To measure joint activity across the team, active duration is the sum of the active durations of all team members with respect to a given activity.
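The difference between activity duration and active duration can be illustrated with a minimal Python sketch. It assumes that each team member's work on an activity has already been logged as non-overlapping start and end times; the `WorkInterval` type, the function names, and the example data are hypothetical and are not taken from the JAG tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkInterval:
    """One stretch of time a team member spent actively working on an activity."""
    member: str
    start: float  # seconds since the start of the trial (assumed unit)
    end: float


def activity_duration(first_aware_at: float, completed_at: float) -> float:
    """Full range of the activity, including time spent on other tasks."""
    return completed_at - first_aware_at


def active_duration(intervals: list[WorkInterval]) -> float:
    """Sum of active working time across all team members.

    Intervals from different members that overlap in time are both counted,
    because the measure sums each member's own active time.
    """
    return sum(iv.end - iv.start for iv in intervals)


# Hypothetical log: alice starts, breaks off for another task, then returns;
# bob helps for a while in the middle.
example_intervals = [
    WorkInterval("alice", 0.0, 10.0),
    WorkInterval("alice", 25.0, 30.0),
    WorkInterval("bob", 5.0, 12.0),
]

print(activity_duration(0.0, 30.0))        # range of the activity
print(active_duration(example_intervals))  # time actually spent on it
```

In this made-up log the activity spans 30 seconds, but the team's active duration is 22 seconds (10 + 5 for alice, 7 for bob). The 15 seconds alice spent elsewhere count toward the range but not toward active work.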

Estimated duration is a lower-bound estimate of the optimal active duration necessary to complete an activity. It represents the shortest duration possible for any combination of team members under ideal conditions for the given activity. Often, the estimated duration will not be achievable given the reaction time and relative positions of team members.

Joint activity efficiency is a ratio of the estimated duration to the measured active duration for a given activity:

$$\mathrm{JAE} = \frac{\text{estimated duration}}{\text{active duration}}$$

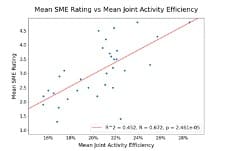

If JAE is 1.0, then the team performed the activity with optimal efficiency. If JAE is less than 1.0, then there was some inefficiency. If tasks are independent, then there are no joint activity constraints. Under such conditions, a given team member could do the task alone, so JAE would represent that individual’s efficiency at the activity. This is a degenerate case. When there are joint activity constraints and teammates must work together, JAE measures the team’s efficiency at the activity. JAE has proven to be an excellent predictor of team performance [8], correlating strongly with both score and subject matter expert ratings.
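As a worked illustration of the ratio, the following Python sketch computes JAE from an estimated duration and a measured active duration. The function name, the input validation, and the example numbers are assumptions made for illustration; they do not describe how the instrumented JAGs compute the measure.

```python
def joint_activity_efficiency(estimated_duration: float,
                              active_duration: float) -> float:
    """Ratio of estimated (lower-bound) duration to measured active duration.

    Both durations must use the same time unit. Because the estimate is a
    lower bound on active duration, the result is expected to be at most 1.0.
    """
    if estimated_duration < 0:
        raise ValueError("estimated_duration must be non-negative")
    if active_duration <= 0:
        raise ValueError("active_duration must be positive")
    return estimated_duration / active_duration


# Hypothetical numbers: the ideal team could finish in 11 s of active work,
# but the observed team spent 22 s of combined active time.
print(joint_activity_efficiency(11.0, 22.0))  # 0.5: half as efficient as the ideal
print(joint_activity_efficiency(22.0, 22.0))  # 1.0: matches the lower-bound estimate
```

The sketch does not clamp the result to 1.0. A value above 1.0 would suggest that the estimated duration was not a true lower bound, which is worth surfacing rather than hiding.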

Learning How to Team

We have conducted some preliminary research in learning how to team. We demonstrated how actor-critic methods could be used to learn how to work as a team in the CREATE Capture-the-Flag domain. We also demonstrated that this approach is transferable from simulation to hardware.